Uniting SQL and NoSQL for monitoring: Why PostgreSQL is the ultimate data store for Prometheus

How to use Prometheus, PostgreSQL + TimescaleDB, and Grafana for storing, analyzing, and visualizing metrics.

Jump to: Tutorial on how to use Prometheus + PostgreSQL + TimescaleDB.

If you ever needed a monitoring solution for your infrastructure then you’ve probably heard about Prometheus, an open-source community-driven monitoring system that also includes metrics collection and alerting.

In fact, the rise of Kubernetes has made Prometheus even more popular. If you look at their shared history, it’s easy to understand why. Google developed and open-sourced Kubernetes based on their decade-long experience with their own cluster scheduling system called Borg. Prometheus was initially developed at SoundCloud and heavily inspired by Borgmon, the internal monitoring system Google developed for Borg. In a way, Kubernetes and Prometheus originate from the same lineage, and are a perfect fit for each other. They also both happen to be 10-letter Greek words. (More on the history of Prometheus here.)

Prometheus is quite simple to start with. What makes Prometheus awesome is its unapologetic approach to solving monitoring in a simple and straightforward way. Its philosophy is to do one thing, and do it well. You can see this approach reflected in the PromQL language which is known for being powerful, yet simplistic.

However, Prometheus’ philosophy can also be limiting. To their credit, the developers of Prometheus foresaw that their product is opinionated, and built in extensibility to allow other systems to improve on it. In turn, Prometheus users often look to other systems as a way to augment their monitoring setup. This is where PostgreSQL comes in.

In particular, Prometheus users often turn to PostgreSQL for the following reasons:

- Scalable, durable, long-term data storage

- Operational ease with a broad tooling ecosystem

- SQL query power, flexibility, and “future-proofing”

Debunking monitoring misconceptions

This is where we typically hear a few objections:

- I thought PostgreSQL doesn’t scale for metrics?

- I thought PostgreSQL required schemas (and I don’t want to worry about schemas)?

- I thought PostgreSQL didn’t connect to Grafana?

- I thought SQL doesn’t work for analyzing metrics data?

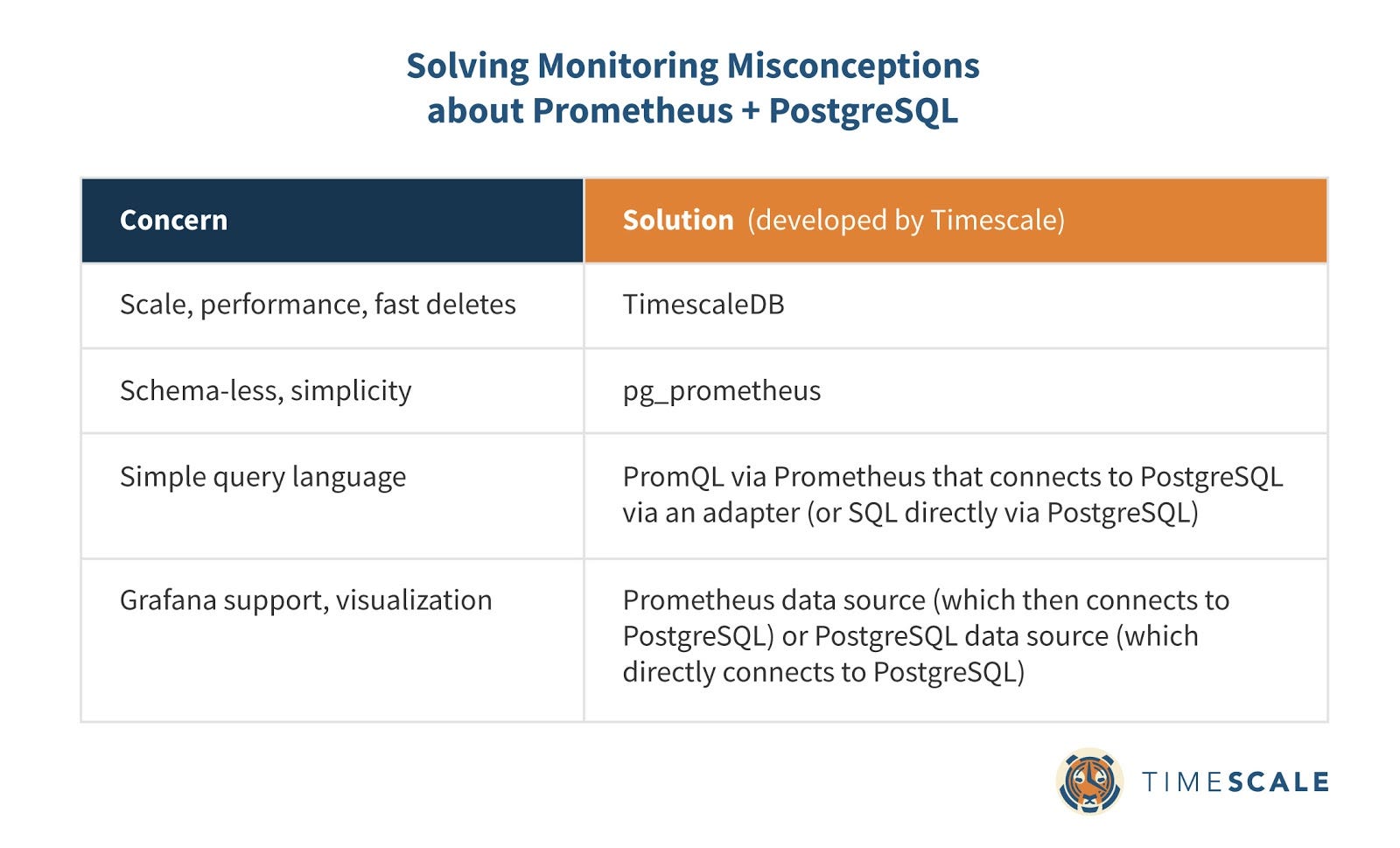

In this post we’ll discuss why these are actually misconceptions, and how new tools (many developed by TimescaleDB engineers) overcome these objections. But at a quick glance:

From this list, the biggest misconception we’ve encountered is that PostgreSQL can’t scale for metrics. This is a fallacy that has already been disproven by TimescaleDB.

TimescaleDB improves on PostgreSQL in a variety of ways: 20x higher inserts, 2000x faster deletes, 1.2x-14,000x faster queries. TimescaleDB also introduces new functions (e.g., time_bucket() for aggregating by arbitrarily-sized time periods) that are necessary for metrics analysis. It does this while still looking and feeling like PostgreSQL, e.g., by still supporting all PostgreSQL commands and full SQL.

(Note that TimescaleDB is packaged as a PostgreSQL extension, not a fork. For more information: How TimescaleDB works.)

Now, let’s get back to why PostgreSQL (and TimescaleDB) is the ideal long-term store for Prometheus.

Scalable, durable, long-term data storage

In order to keep Prometheus simple and easy to operate, its creators intentionally left out some of the scaling features one would normally expect. The data in Prometheus is stored locally within the instance and is not replicated. Having both compute and data storage on one node may make it easier to operate, but also makes it harder to scale and ensure high availability.

As a result, Prometheus is not designed to be a long-term metrics store. From the Prometheus documentation:

[Prometheus] is not arbitrarily scalable or durable in the face of disk or node outages and should thus be treated as more of an ephemeral sliding window of recent data.

This means that if you want to store your Prometheus data in a scalable, durable way for more analysis, you will need to complement Prometheus with a time-series database (since metrics data is time-series data).

Traditionally, PostgreSQL has had scalability challenges that have prevented its use as a metrics store. However, thanks to TimescaleDB, PostgreSQL can now easily handle terabytes of data in a single table (as we discussed earlier).

PostgreSQL also supports high availability and replication, further making it a good fit for long term data storage. In addition, it provides advanced capabilities and features, such as full SQL, joins, even geospatial support via PostGIS, which is simply not available in Prometheus.

So, how does PostgreSQL integrate with Prometheus to store data? All the metrics that are recorded into Prometheus are first written to the local node and then written to PostgreSQL (more information below). This means that all of your metrics are immediately backed up, so that any disk failure on a Prometheus node will be less painful.

PostgreSQL can also store other types of data (metrics, relational, JSON), or even other sources of time-series data (via TimescaleDB) allowing you to centralize your monitoring data from different sources and simplify your stack. You can even join those different types of data and add context to your monitoring data for richer analysis.

Since one needs TimescaleDB to store and properly analyze metrics data in PostgreSQL, from here on we’ll talk about TimescaleDB, with the full understanding that TimescaleDB looks and feels just like PostgreSQL.

Operational ease with a broad tooling ecosystem

There are several storage options for Prometheus. However, the challenge with all of them is that they will likely require your team to operate and manage yet another system.

TimescaleDB is an exception. It operates just like PostgreSQL, which means that teams who already have PostgreSQL experience can re-use that knowledge for their TimescaleDB Prometheus storage. TimescaleDB also inherits the broad PostgreSQL ecosystem of tooling, management, connector, and visualization options like Kafka, Apache Spark, R/Python/Java/etc, ORMs, Tableau, etc.

In particular, given the decades of development invested in streamlining PostgreSQL, it (and TimescaleDB) is often (and perhaps surprisingly) more resource efficient than other newer data stores that were specifically built from the ground up for metrics. As one Prometheus user recently told us:

Being able to use the lighter-weight and scalability features of PostgreSQL are the big thing. In comparison, another NoSQL store we tried was a memory hog. The future of being able to do more granular queries is great, too.

Other operational improvements relate to how Prometheus works. The only way to scale Prometheus is by federation. However, there are cases where federation is not a good fit: for example, when copying large amounts of data from multiple Prometheus instances to be handled by a single machine. This can result in poor performance, decreased reliability (an additional point of failure), duplicated data, or even loss of data. These are all the problems you can “outsource” to TimescaleDB.

SQL query power, flexibility, and “future-proofing”

PromQL is the Prometheus native query language. It’s a very powerful and expressive query language that allows you to easily slice and dice metrics data and apply a variety of monitoring-specific functions.

For example, here is a query that is perhaps better expressed in PromQL than SQL:

Max CPU frequency by CPU core in the last 5 minutes:

PromQL:

max(max_over_time(node_cpu_frequency_hertz[5m])) by (cpu)SQL:

SELECT labels->>'cpu', MAX(value)

FROM metrics

WHERE name='node_cpu_frequency_hertz'

AND time > NOW() - interval '5 min'

GROUP BY labels->>'cpu';However, there may be times where you need greater query flexibility and power than what PromQL provides. For example, when:

- Trying to cross-correlate metrics with events and incidents that occurred

- Joining metrics data with other data sources (e.g., infrastructure inventory or weather data)

- Running more granular queries for active troubleshooting

- Connecting to other visualization tools like Tableau

- Connecting to Python/R or Apache Spark to apply machine learning or other forms of deeper analysis on metrics

For example, here is a query that is more naturally expressed in SQL:

Check whether system performance was impacted by a Linux kernel update

PromQL: Hard to do.

In PromQL, this would be hard to do, unless this information (e.g., live kernel update history) was already stored in Prometheus. Considering the problems Prometheus has with high-cardinality datasets, this would require storing data in a normalized way. Even then, adding external metadata to Prometheus can be cumbersome, while in SQL it would be just an insert. Also, in many cases, storing external metadata in Prometheus may not be feasible, or in the case of certain types of data (e.g., weather, user, business) may not even make sense.

SQL:

SELECT time_bucket('1 hour', m.time) AS hour_bucket,

m.labels->>'host', h.kernel_updated, AVG(value)

FROM metrics m LEFT JOIN hosts h on h.host = m.labels->>'host'

AND time_bucket('1 hour', m.time) = time_bucket('1 hour', h.kernel_updated)

WHERE m.name='node_load5' AND m.time > NOW() - interval '7 days'

GROUP BY hour_bucket, m.labels->>'host', h.kernel_updated

ORDER BY hour_bucket;In this case, we are using an additional table containing information about Linux kernel updates per host, and then easily joining that data with metrics from Prometheus to figure out if maybe some kernel patch decreases system performances.

Sometimes you just want to “future-proof” your system such that it will support not only the queries you want to run today, but also what you’d like to run in the future.

This is where the full SQL support of TimescaleDB can be of great help, allowing you to apply the full breadth of SQL to your Prometheus data, joining your metrics data with any other data you might have, connect to other data analysis and visualization tools, and run more powerful queries. (Some more examples here.)

Prometheus + TimescaleDB + PostgreSQL = Better together

By using Prometheus and TimescaleDB together, you can combine the simplicity of Prometheus with the reliability, power, flexibility, and scalability of TimescaleDB, and pick the approach that makes most sense for the task at hand. In particular, it is because Prometheus and TimescaleDB are so different that they become the perfect match, with each complementing the other. For example, as mentioned earlier, you can use either PromQL or full SQL for your queries, or both.

To make this work there are two components (both developed by TimescaleDB):

1. The Prometheus PostgreSQL Adapter

2. A TimescaleDB database with pg_prometheus

The adapter is basically a translation proxy that Prometheus uses for reading and writing data into PostgreSQL/TimescaleDB. And the adapter has a dependency on the pg_prometheus PostgreSQL extension, which takes care of writing the data in most optimal format for storage and querying within PostgreSQL/TimescaleDB.

In particular, pg_prometheus implements the Prometheus data model for PostgreSQL. That means you can write Prometheus data directly to PostgreSQL/TimescaleDB using the familiar exposition format, even without Prometheus. (In other words, it ensures that you don’t have to worry about schemas.)

We’ve actually created a step-by-step tutorial to walk Prometheus users through this processes which you can find here.

Bonus! Using the TimescaleDB data source with Grafana

We also know that Grafana is another popular platform for analytics and monitoring. But until recently, databases supported by Grafana have been NoSQL systems, offering restrictive SQL-like or custom query languages that were limited in scope, and designed for specific data model and architectures in mind. Not anymore.

Timescale engineer Sven Klemm built a PostgreSQL data source to use with Grafana to query TimescaleDB directly to visualize your Prometheus data. This is often recommended for users who prefer familiarity and/or an easier query language. In this case, by connecting Grafana to Prometheus, you still get access to TimescaleDB indirectly through Prometheus as the long-term store of your data. Using this method, Prometheus works more like a cache. If it doesn’t already have the requested information cached, Prometheus will fetch the data from TimescaleDB, cache it, and return the result.

Yet soon we will be able to do more. We’re in the process of building a better query editor for TimescaleDB in Grafana, to make it much easier to explore the available time-series data and generate more of these queries automatically.

The TimescaleDB query builder for Grafana will look something like this:

Stay tuned for our step-by-step tutorial on how to use the Grafana query editor. But for now, we encourage you to take the time to read the tutorial on Getting started with Prometheus, PostgreSQL, and TimescaleDB.

(Update: Visit Grafana’s blog to learn how to make time-series exploration easier with the PostgreSQL/TimescaleDB query editor.)

Next steps

- Follow the tutorial: Getting started with Prometheus, PostgreSQL, and TimescaleDB

- Read more of our blog posts: High availability in PostgreSQL, How to scale PostgreSQL 10 using table inheritance and declarative partitioning

- Read our benchmarks: TimescaleDB vs. PostgreSQL, PostgreSQL 10, Cassandra, MongoDB

Like this post? Please recommend and/or share.

Want to learn more? Join our Slack community and check out our GitHub.